Recently, the term “Artificial Intelligence” has infiltrated the titles of many news stories. Self-driving cars, computer programs playing Atari games better than humans, AlphaGo beating the world champion in the game of Go, tactical experts beaten by computer programs in combat simulations, and many other recent achievements make us believe that we are on the verge of a technological singularity. However, experts in machine learning and artificial intelligence are somewhat more skeptical; see, for example, “Why AlphaGo is not AI”, “AlphaGo is not the solution to AI” and “What counts as artificially intelligent? AI and deep learning, explained”. Deep learning has made it possible to train complicated models for various perception tasks, such as speech and image recognition, but we have yet to see a model that shows a deeper understanding of the world and can reason beyond explicitly stated information. In particular, one task where machines still lag far behind what we would call intelligence is text understanding. Despite the tremendous success of IBM Watson in defeating the best human competitors on the Jeopardy! TV show, the techniques used to generate the answers are rather shallow and are still based on simple text matching.

Oren Etzioni, CEO of the Allen Institute for Artificial Intelligence (AI2), said: “IBM has announced that Watson is ‘going to college’ and ‘diagnosing patients’. But before college and medical school — let’s make sure Watson can ace the 8th grade science test. We challenge Watson, and all other interested parties — take the Allen AI Science Challenge.”

In October 2015, AI2 invited researchers around the world to participate in the Allen AI Science Challenge hosted on Kaggle.

Data

The dataset provided for this competition contains a collection of multiple-choice questions from a typical US 8th grade science curriculum. Each question has four possible answers, only one of which is correct. The training set contained 2,500 questions, the validation set 8,132 questions, and the test set 21,298 questions. Unfortunately, the exact dataset was licensed for this challenge only and was removed from the Kaggle website. AI2 released smaller datasets of a similar nature on their website.

Here is an example science question:

Which of the following tools is most useful for tightening a small mechanical fastener?

A. chisel

B. pliers

C. sander

D. saw

Memorizing the answers to the training questions wouldn’t be very helpful for answering new, unseen questions. Therefore, a question answering system has to draw on external data sources. The rules of the challenge allowed participants to use any publicly available dictionaries and text collections. As examples, the organizers recommended a list of knowledge resources and textbooks. Most text corpora, such as Wikipedia, are quite big, so one needs to preprocess and index the documents in order to use them in a question answering system. There are many open source search systems available, and Apache Lucene is one of the most popular.

Task and Evaluation

The task was to return a single answer (A, B, C or D) for each of the test questions. The official AI Challenge performance metric was accuracy, i.e. the fraction of correctly answered questions. Since each question has four options, anybody could get a score of around 0.25 by random guessing.
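
To make the metric concrete, here is a minimal sketch of how accuracy could be computed and how the random-guessing floor can be checked by simulation. The labels below are made up for illustration and are not taken from the challenge data.

```python
import random


def accuracy(predictions, gold):
    """Fraction of questions whose predicted label matches the gold label."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


# Hypothetical labels, for illustration only.
gold = ["B", "A", "D", "C", "B", "A", "C", "D"]
predictions = ["B", "C", "D", "C", "A", "A", "B", "D"]
score = accuracy(predictions, gold)  # 5 of the 8 predictions match

# Simulate uniform random guessing over four options.
rng = random.Random(0)
n = 100_000
sim_gold = [rng.choice("ABCD") for _ in range(n)]
sim_pred = [rng.choice("ABCD") for _ in range(n)]
random_score = accuracy(sim_pred, sim_gold)  # close to 0.25
```

Any system scoring noticeably above 0.25 is therefore extracting at least some signal from the question text.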

Oh, did I forget to mention money? 1st Place - $50,000, 2nd Place - $20,000, 3rd Place - $10,000.

Results

The final states of the public and private leaderboards differ a little bit. As we can see, the winners achieved an accuracy of 0.59308, which is not even a D in the US grading system.

Baseline

The organizers provided a baseline approach, which uses Lucene over a Wikipedia index and achieved a public leaderboard score of 0.4325. The idea is to query the index with the concatenation of the question and each candidate answer, and to compare the retrieval scores of the top matched documents. There are multiple variations of the baseline, e.g. using different retrieval metrics, using the scores of the top N documents, etc. One particularly useful idea is to index paragraphs instead of full documents. Wikipedia documents are usually long, but to answer a particular question we are searching for a specific fact, which is most likely expressed within a single passage or even a single sentence. Another direction for improvement would be to incorporate additional text collections, such as CK-12 textbooks and other science books, flashcards, etc.
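
The baseline idea can be sketched in a few lines. This is not the organizers’ code: instead of Lucene over Wikipedia, it assumes a small pure-Python BM25 scorer (the `TinyBM25` class and `answer_question` helper are hypothetical names) and a made-up four-paragraph corpus that stands in for the paragraph index.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens (no stemming, no stopwords)."""
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyBM25:
    """Illustrative in-memory BM25 index over paragraphs (a stand-in for Lucene)."""

    def __init__(self, paragraphs, k1=1.2, b=0.75):
        self.k1, self.b = k1, b
        self.docs = [Counter(tokenize(p)) for p in paragraphs]
        self.lengths = [sum(d.values()) for d in self.docs]
        self.avg_len = sum(self.lengths) / len(self.docs)
        # Document frequency: number of paragraphs containing each term.
        self.df = Counter(term for d in self.docs for term in d)

    def idf(self, term):
        n, df = len(self.docs), self.df.get(term, 0)
        return math.log(1 + (n - df + 0.5) / (df + 0.5))

    def score(self, query_terms, i):
        doc = self.docs[i]
        norm = 1 - self.b + self.b * self.lengths[i] / self.avg_len
        total = 0.0
        for term in query_terms:
            tf = doc.get(term, 0)
            if tf:
                total += self.idf(term) * tf * (self.k1 + 1) / (tf + self.k1 * norm)
        return total

    def top_score(self, query):
        """Score of the best-matching paragraph for the query."""
        terms = tokenize(query)
        return max(self.score(terms, i) for i in range(len(self.docs)))


def answer_question(index, question, options):
    """Query with question + option text and keep the option with the best top hit."""
    scores = {label: index.top_score(question + " " + text)
              for label, text in options.items()}
    return max(scores, key=scores.get), scores


# Made-up paragraphs standing in for an indexed corpus.
paragraphs = [
    "A chisel is a tool with a sharp edge used for carving wood or stone.",
    "Pliers are a hand tool useful for gripping and tightening a small fastener such as a nut.",
    "A sander is a power tool that smooths surfaces with sandpaper.",
    "A saw is a tool with a toothed blade used for cutting wood.",
]
index = TinyBM25(paragraphs)
question = ("Which of the following tools is most useful for "
            "tightening a small mechanical fastener?")
options = {"A": "chisel", "B": "pliers", "C": "sander", "D": "saw"}
best, scores = answer_question(index, question, options)
```

On this toy corpus the sketch picks option B, because only “pliers” co-occurs with the question terms in a single paragraph. The same example also hints at why paragraph-level indexing helps: the deciding evidence sits inside one short passage.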

Approaches

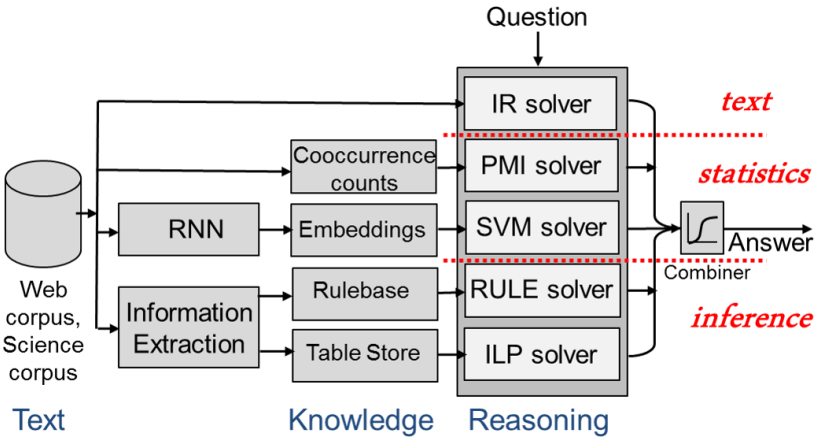

Many of the participating models drew inspiration from the research paper “Combining Retrieval, Statistics, and Inference to Answer Elementary Science Questions” by Peter Clark, Oren Etzioni, Tushar Khot, Ashish Sabharwal, Oyvind Tafjord, and Peter Turney, which appeared at AAAI 2016. This paper focuses on 4th grade science exams as the target task, but the approach can obviously skip a couple of years and be applied to 8th grade exams. The described system is an ensemble over three different layers of knowledge representation: text, corpus statistics and knowledge base.

- The text-based module is an implementation of the Information Retrieval (IR) approach, which is based on the idea that the correct answer terms are likely to appear near the question terms. In the described implementation, Lucene was used to build an index of sentences from the text corpora (CK12 textbooks and a crawled web corpus). Each answer is scored based on the top retrieved sentence that mentions at least one non-stopword from the question and one from the answer. Lucene implements basic TF-IDF and BM25 retrieval models, which don’t account for the proximity of matched terms. There are more advanced models, such as the Positional Language Model or the Sequential Dependence Model. Unfortunately, we aren’t aware of any experiments with such models during the challenge.

- The corpus statistics-based module takes the idea of question and answer term co-occurrences to the next level. Rather than scoring the answer by the top retrieved sentence, one can consider statistics computed over the whole corpus. Pointwise Mutual Information (PMI) is one way to measure such associations. This module scores each answer using the average PMI between question and answer unigrams, bigrams, trigrams and skip-bigrams. Another implemented approach that falls into this category is based on cosine similarities between question and answer word embeddings. Many competitors used pre-trained word vectors, such as word2vec or GloVe. The paper describes a couple of ways to represent the question and the answer (average pairwise cosine similarity of terms, or the sum of term embeddings), and multiple similarity scores are combined using a trained SVM model. On its own, this approach could only reach a score in the 0.3x range, but it was useful in combination with other techniques. Embeddings are usually built on the idea that similar words are used in similar contexts. Another related approach, described on the Kaggle forum, used distances between question and answer terms in a corpus (Wikipedia) to score each candidate. The described heuristic led to a score of 0.39.

- The knowledge base module contains two approaches: probabilistic logic rules and integer linear programming. The rule-based approach works over a knowledge base extracted with a hand-crafted set of rules that look for cause, purpose, requirement, condition and similar relations in a science corpus. You can see an example of a rule and the extracted knowledge on page 3 of the paper. Once such a knowledge base is constructed, a textual entailment algorithm is used to score each question-answer pair by attempting to derive it from this knowledge. The last approach of the paper operates over a set of extracted tables, each of which corresponds to a single predicate (e.g. location). In this approach, question answering is treated as a global optimization over tables using integer linear programming techniques.
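
As a rough illustration of the corpus statistics idea, the sketch below scores answers by average PMI, where PMI(x, y) = log(p(x, y) / (p(x) p(y))). It makes several simplifying assumptions that are not from the paper: probabilities are estimated from sentence-level co-occurrence in a made-up toy corpus, only unigrams are used, the question terms are hand-picked content words, and pairs that never co-occur get a score of 0 instead of negative infinity.

```python
import math
import re
from itertools import product


def tokenize(text):
    """Set of lowercase word tokens in a sentence."""
    return set(re.findall(r"[a-z]+", text.lower()))


def pmi(x, y, sentence_sets):
    """Pointwise mutual information from sentence-level co-occurrence counts."""
    n = len(sentence_sets)
    count_x = sum(x in s for s in sentence_sets)
    count_y = sum(y in s for s in sentence_sets)
    count_xy = sum(x in s and y in s for s in sentence_sets)
    if count_xy == 0:
        # Simplifying assumption: unseen pairs count as "no association".
        return 0.0
    return math.log((count_xy / n) / ((count_x / n) * (count_y / n)))


def average_pmi(question_terms, answer_terms, sentence_sets):
    """Average PMI over all (question term, answer term) pairs."""
    pairs = list(product(question_terms, answer_terms))
    return sum(pmi(q, a, sentence_sets) for q, a in pairs) / len(pairs)


# Made-up toy corpus, for illustration only.
corpus = [
    "Pliers grip and tighten a fastener.",
    "Use pliers to tighten a loose nut.",
    "A saw cuts wood.",
    "A chisel carves wood.",
    "A sander smooths wood.",
]
sentence_sets = [tokenize(s) for s in corpus]

question_terms = ["tighten", "fastener"]  # hand-picked content words
candidates = ["chisel", "pliers", "sander", "saw"]
scores = {c: average_pmi(question_terms, [c], sentence_sets) for c in candidates}
best = max(scores, key=scores.get)
```

Here “pliers” is the only candidate that co-occurs with the question terms, so it gets a positive average PMI while the others stay at 0. A real system would need a far larger corpus and some smoothing for rare pairs.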

The paper describes ablation experiments that study the importance of each component. The results suggest that the PMI and IR-based components have the strongest effect on overall system performance. However, certain types of questions are better handled by the knowledge base approaches, and therefore the combination of all components significantly outperforms each of them individually.

Winners

The official summary of the Allen AI Science Challenge results was published by Allen AI in their paper “Moving Beyond the Turing Test with the Allen AI Science Challenge”. It is no surprise that the winning approaches are big ensembles of different features and techniques. Most participants emphasized the important role of corpora, and all winning systems used a combination of Wikipedia, science textbooks and other online resources. Unfortunately, we still don’t have a good tool for deep text understanding and reasoning: shallow IR-based techniques turned out to be among the most useful signals and, as the winner pointed out, reach a score of 0.55 on their own.

The organizers asked the winning teams to open source their approaches, which they did, so we can dig deeper into their implementations:

- Cardal: https://github.com/Cardal/Kaggle_AllenAIscience

- PoweredByTalkWalker: https://github.com/bwilbertz/kaggle_allen_ai

- Alejandro Mosquera: https://github.com/amsqr/Allen_AI_Kaggle

Conclusion

The Allen AI Science Challenge demonstrated that there is still a long way to go before automatic question answering systems are able to reason about the world. Approaches that try to work with structured representations of knowledge currently don’t perform as well, on average, as relatively simple information retrieval techniques. In the challenge overview paper, Allen AI mentioned that they are going to launch a new $1 million challenge, with the goal of moving from information retrieval to intelligent reasoning. Let’s keep track of news from AI2 by following their Twitter!